Lane-level scene annotations provide invaluable data for trajectory planning in autonomous vehicles operating in complex environments such as urban areas. However, obtaining such data is time-consuming and expensive, since lane annotations must be created manually by humans and are therefore hard to scale to large areas.

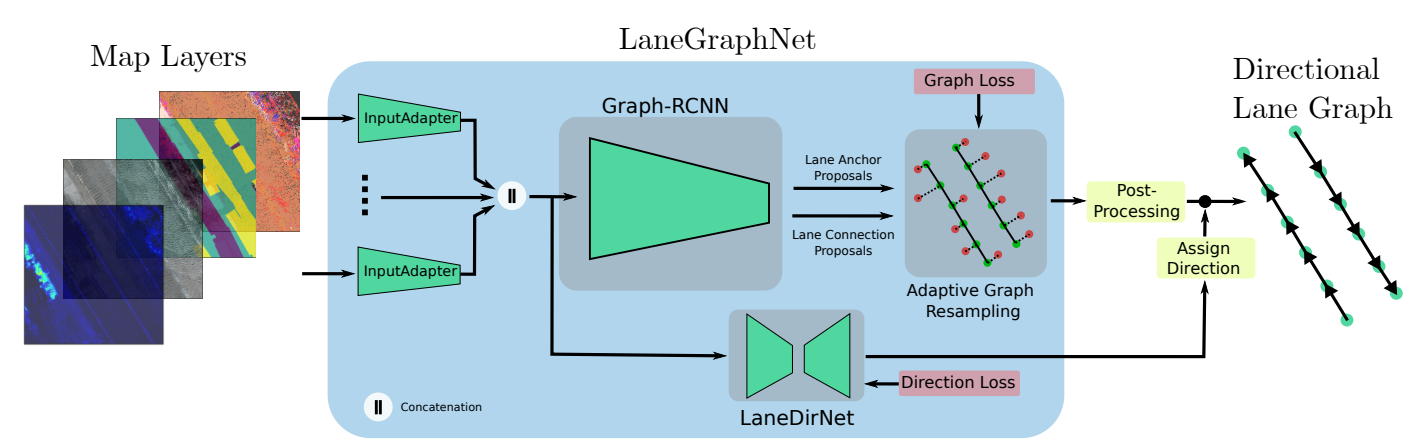

In this work, we propose a novel approach for lane graph estimation from bird’s-eye-view images. We formulate the estimation of lane shapes and lane connections as a graph estimation problem in which lane anchor points are graph nodes and lane segments are graph edges. We train a graph estimation model on bird’s-eye-view data processed from the popular NuScenes dataset and its map expansion pack. Furthermore, we estimate the direction of the lane connection for each lane segment with a separate model, which results in a directed lane graph. We illustrate the performance of our LaneGraphNet model on the challenging NuScenes dataset and provide extensive qualitative and quantitative evaluation. Our model shows promising performance for most evaluated urban scenes and can serve as a step towards automated generation of HD lane annotations for autonomous driving.
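To make the graph formulation concrete, the following is a minimal sketch of a directed lane graph in which anchor points are nodes and lane segments are directed edges. It is an illustration only and not the LaneGraphNet implementation: the names `LaneGraph`, `add_anchor`, `add_segment`, `successors`, and `orient` are hypothetical, the coordinates are made-up bird’s-eye-view pixel positions, and the boolean direction flags merely stand in for the output of the separate direction model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class LaneGraph:
    """Directed lane graph: nodes are lane anchor points, edges are lane segments."""

    # node id -> (x, y) position, assumed here to be bird's-eye-view pixel coordinates
    nodes: Dict[int, Tuple[float, float]] = field(default_factory=dict)
    # (source id, target id) pairs, oriented along the driving direction
    edges: Set[Tuple[int, int]] = field(default_factory=set)

    def add_anchor(self, node_id: int, x: float, y: float) -> None:
        self.nodes[node_id] = (x, y)

    def add_segment(self, src: int, dst: int) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must be existing anchor points")
        self.edges.add((src, dst))

    def successors(self, node_id: int) -> List[int]:
        """Anchor points reachable from node_id via one lane segment."""
        return sorted(dst for src, dst in self.edges if src == node_id)


def orient(
    undirected: List[Tuple[int, int]], forward: List[bool]
) -> List[Tuple[int, int]]:
    """Orient each undirected segment; forward=False flips its endpoints.

    The flags are a placeholder for a per-segment direction prediction.
    """
    return [(a, b) if fwd else (b, a) for (a, b), fwd in zip(undirected, forward)]


# Hypothetical example: one lane that forks into two at anchor point 1.
graph = LaneGraph()
for node_id, (x, y) in {0: (10, 50), 1: (30, 50), 2: (50, 40), 3: (50, 60)}.items():
    graph.add_anchor(node_id, x, y)

undirected_segments = [(0, 1), (2, 1), (1, 3)]
direction_flags = [True, False, True]  # made-up direction predictions
for src, dst in orient(undirected_segments, direction_flags):
    graph.add_segment(src, dst)

print(graph.successors(1))
```

Storing edges as ordered pairs keeps the undirected graph estimate and the direction estimate separable: the same segment list can be re-oriented without touching the anchor points.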